RAG Evaluations

Retrieval-augmented generation (RAG) has become one of the most popular applications of LLMs. In this tutorial, we demonstrate how to evaluate the performance of a RAG application using LangSmith.

Overview

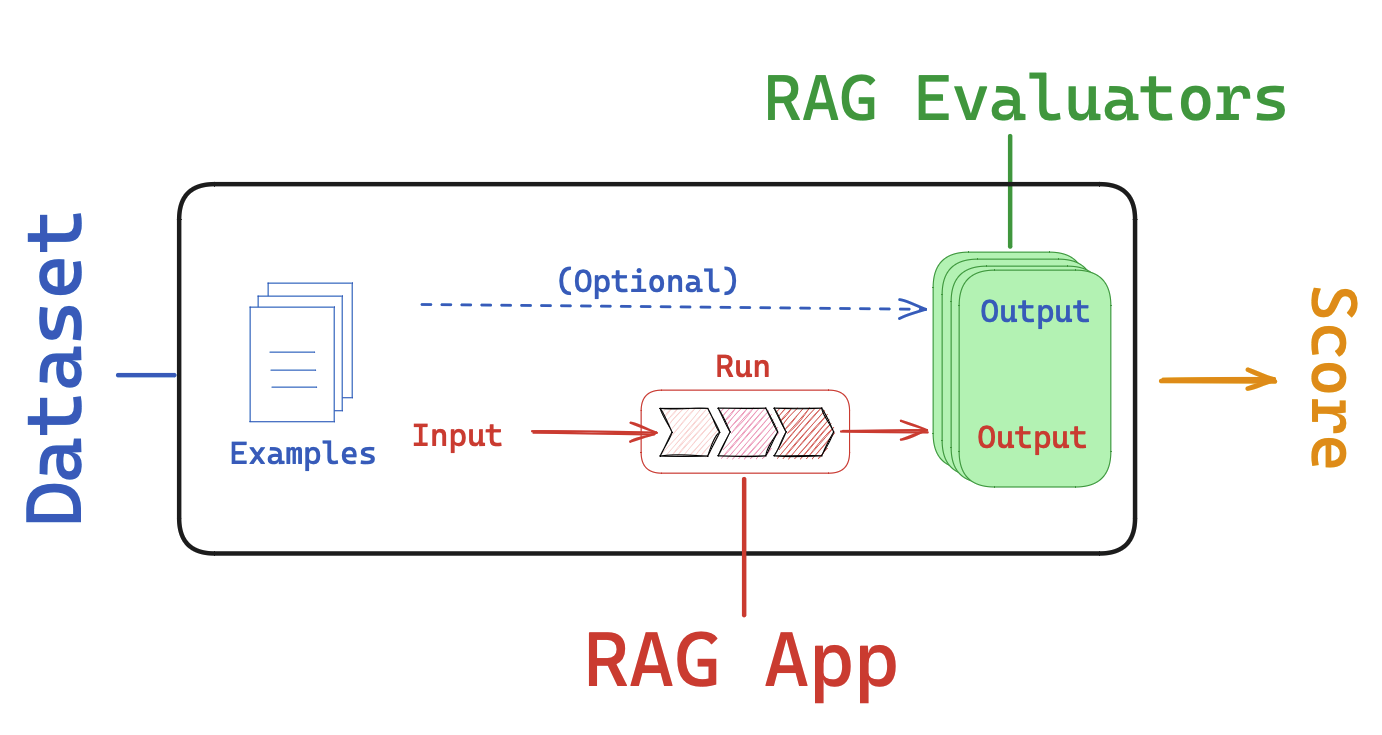

Evaluating RAG applications involves creating a dataset, running your RAG application, and then evaluating the performance of your application using different types of evaluators.
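As a rough mental model, the three steps above can be sketched in plain Python without LangSmith. Everything in this sketch (`toy_app`, `exact_match`, `run_evaluation`, and the sample data) is a hypothetical stand-in and not the LangSmith API; the real `evaluate` call used later in this tutorial also records traces and experiment results for you.

```python
from statistics import mean

# Hypothetical dataset: each example pairs an input with a reference output
dataset = [
    {"inputs": {"question": "2 + 2?"}, "outputs": {"answer": "4"}},
    {"inputs": {"question": "Capital of France?"}, "outputs": {"answer": "Paris"}},
]


def toy_app(inputs: dict) -> dict:
    """Stand-in for a RAG application: looks answers up in a fixed table."""
    known = {"2 + 2?": "4", "Capital of France?": "Lyon"}
    return {"answer": known.get(inputs["question"], "I don't know")}


def exact_match(outputs: dict, reference: dict) -> dict:
    """Stand-in evaluator: 1 if the answer equals the reference, else 0."""
    return {"key": "exact_match", "score": int(outputs["answer"] == reference["answer"])}


def run_evaluation(app, examples, evaluators):
    """Run the app on every example and apply every evaluator to its output."""
    results = []
    for example in examples:
        outputs = app(example["inputs"])
        for evaluator in evaluators:
            results.append(evaluator(outputs, example["outputs"]))
    return results


results = run_evaluation(toy_app, dataset, [exact_match])
average = mean(r["score"] for r in results)
print(f"exact_match average: {average}")
```

The evaluators in the rest of this tutorial follow the same pattern, except that the scoring is delegated to an LLM judge instead of a string comparison.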

Setup

First, let's set our environment variables:

import os

os.environ["LANGCHAIN_API_KEY"] = "YOUR LANGCHAIN API KEY"
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["OPENAI_API_KEY"] = "YOUR OPENAI API KEY"

Dataset

We are going to create a dataset of question/answer pairs based on Lilian Weng's blog posts, which our RAG application will retrieve from. Note that if you already have a dataset you want to test on, you can skip this step.

from langsmith import Client

client = Client()

# Create examples for the dataset: (question, reference answer) pairs
examples = [
    (
        "How does the ReAct agent use self-reflection?",
        "ReAct integrates reasoning and acting, performing actions - such as tools like Wikipedia search API - and then observing / reasoning about the tool outputs.",
    ),
    (
        "What are the types of biases that can arise with few-shot prompting?",
        "The biases that can arise with few-shot prompting include (1) Majority label bias, (2) Recency bias, and (3) Common token bias.",
    ),
    (
        "What are five types of adversarial attacks?",
        "Five types of adversarial attacks are (1) Token manipulation, (2) Gradient based attack, (3) Jailbreak prompting, (4) Human red-teaming, (5) Model red-teaming.",
    ),
]

# Save the examples to LangSmith
dataset_name = "RAG Agent Evaluation"
dataset = client.create_dataset(dataset_name=dataset_name)

# Use the keys that the predictors and evaluators below read:
# "input_question" for inputs and "output_answer" for reference outputs
inputs, outputs = zip(
    *[
        ({"input_question": text}, {"output_answer": label})
        for text, label in examples
    ]
)
client.create_examples(inputs=inputs, outputs=outputs, dataset_id=dataset.id)
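The `zip(*[...])` idiom used when saving the examples can be easy to misread, so here is a small standalone sketch of what it produces. The `pairs` data is made up for illustration; the key names `input_question` and `output_answer` are the ones the predictors and evaluators later in this tutorial read.

```python
# Hypothetical (question, reference answer) pairs, shaped like `examples` above
pairs = [
    ("What is RAG?", "Retrieval-augmented generation."),
    ("What is a retriever?", "A component that fetches relevant documents."),
]

# Build one (inputs, outputs) tuple per pair, then transpose with zip(*...)
rows = [({"input_question": q}, {"output_answer": a}) for q, a in pairs]
inputs, outputs = zip(*rows)

# inputs holds one dict per question; outputs holds the matching reference answers
print(inputs)
print(outputs)
```

The result is two parallel tuples of dictionaries, aligned by position, which is the shape passed as `inputs` and `outputs` when creating the examples.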

RAG application

For this tutorial we are using LangChain, but LangSmith works regardless of whether or not your pipeline is built with LangChain.

Now we can define our RAG application. Again, if you already have a RAG application, you can skip this step. We will be using a fairly simple RAG app for this tutorial but there are plenty of ways you can improve on it such as introducing extra reasoning steps, adding feedback loops, etc.

Vector Store and Retriever

First, let's define our vector store and retriever, loading the blog posts that our question/answer pairs are based on.

from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.vectorstores import SKLearnVectorStore
from langchain_openai import OpenAIEmbeddings

# List of URLs to load documents from
urls = [
    "https://lilianweng.github.io/posts/2023-06-23-agent/",
    "https://lilianweng.github.io/posts/2023-03-15-prompt-engineering/",
    "https://lilianweng.github.io/posts/2023-10-25-adv-attack-llm/",
]

# Load documents from the URLs and flatten the per-URL lists into one list
docs = [WebBaseLoader(url).load() for url in urls]
docs_list = [item for sublist in docs for item in sublist]

# Initialize a text splitter with specified chunk size and overlap
text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
    chunk_size=250, chunk_overlap=0
)

# Split the documents into chunks
doc_splits = text_splitter.split_documents(docs_list)

# Add the document chunks to the "vector store" using OpenAIEmbeddings
vectorstore = SKLearnVectorStore.from_documents(
    documents=doc_splits,
    embedding=OpenAIEmbeddings(),
)

# Return the 4 most similar chunks per query; k is passed through search_kwargs
retriever = vectorstore.as_retriever(search_kwargs={"k": 4})
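To make the chunk-then-retrieve idea concrete without any API calls, here is a toy sketch. It is not how `SKLearnVectorStore` or `OpenAIEmbeddings` work internally: it splits on words rather than tokens and ranks chunks by bag-of-words cosine similarity instead of learned embeddings. All function names and the sample text are hypothetical.

```python
import math
from collections import Counter


def split_into_chunks(text: str, chunk_size: int) -> list[str]:
    """Split text into consecutive, non-overlapping chunks of chunk_size words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int) -> list[str]:
    """Return the k chunks most similar to the query."""
    query_vec = Counter(query.lower().split())
    scored = [(cosine_similarity(query_vec, Counter(c.lower().split())), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]


text = (
    "agents plan tasks and use tools "
    "prompts can include several few-shot examples "
    "adversarial attacks include jailbreak style prompting"
)
chunks = split_into_chunks(text, chunk_size=6)
top = retrieve("jailbreak attacks", chunks, k=1)
print(top)
```

The `k` argument plays the same role here as the retriever's `k`: it caps how many chunks are handed to the LLM as context.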

Application

We can now define the application by creating functions to retrieve docs, invoke the LLM, and get the answer.

import openai
from langsmith import traceable
from langsmith.wrappers import wrap_openai


class RagBot:
    def __init__(self, retriever, model: str = "gpt-4-0125-preview"):
        self._retriever = retriever
        # Wrapping the client instruments the LLM
        self._client = wrap_openai(openai.Client())
        self._model = model

    @traceable()
    def retrieve_docs(self, question):
        return self._retriever.invoke(question)

    @traceable()
    def invoke_llm(self, question, docs):
        response = self._client.chat.completions.create(
            model=self._model,
            messages=[
                {
                    "role": "system",
                    "content": f"""You are an assistant for question-answering tasks.
                    Use the following documents to answer the question.
                    If you don't know the answer, just say that you don't know.
                    Use three sentences maximum and keep the answer concise.

                    Documents: {docs}

                    Answer:""",
                },
                {
                    "role": "user",
                    "content": question,
                },
            ],
        )

        # Evaluators will expect "answer" and "contexts"
        return {
            "answer": response.choices[0].message.content,
            "contexts": [str(doc) for doc in docs],
        }

    @traceable()
    def get_answer(self, question: str):
        docs = self.retrieve_docs(question)
        return self.invoke_llm(question, docs)


rag_bot = RagBot(retriever)

Predictors

We can now define predictor functions to call, which will:

- Take a dataset example
- Extract the relevant key (e.g., `input_question`) from the example
- Pass it to the RAG chain
- Return the relevant output values from the RAG chain (either just the answer, or the answer and the contexts)

def predict_rag_answer(example: dict):
    """Use this for answer evaluation"""
    response = rag_bot.get_answer(example["input_question"])
    return {"answer": response["answer"]}


def predict_rag_answer_with_context(example: dict):
    """Use this for evaluation of retrieved documents and hallucinations"""
    response = rag_bot.get_answer(example["input_question"])
    return {"answer": response["answer"], "contexts": response["contexts"]}

Evaluator

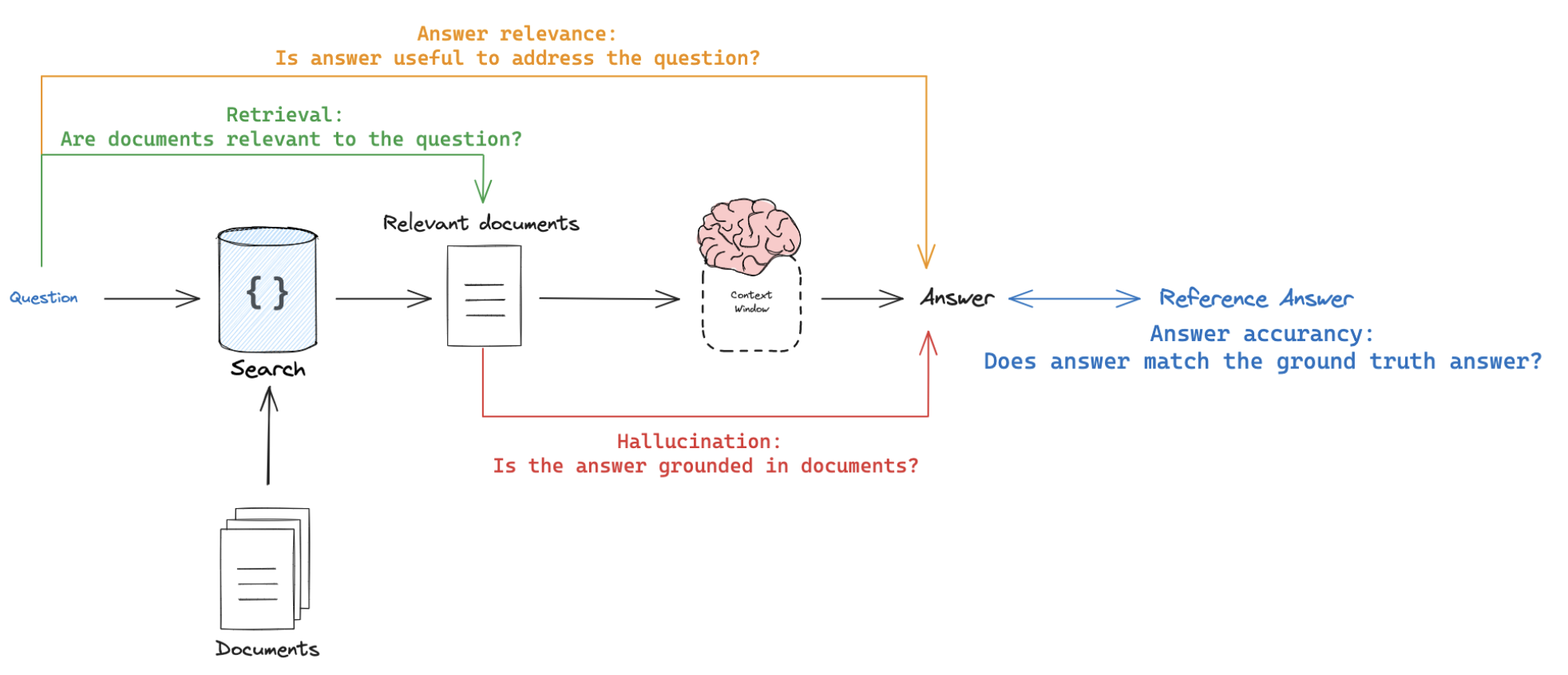

There are at least 4 types of RAG eval that users are typically interested in.

- Response vs reference answer
  - Goal: Measure "how similar/correct is the RAG chain answer, relative to a ground-truth answer"
  - Mode: Uses ground truth (reference) answer supplied through a dataset
  - Judge: Use LLM-as-judge to assess answer correctness.

- Response vs input
  - Goal: Measure "how well does the generated response address the initial user input"
  - Mode: Reference-free, because it will compare the answer to the input question
  - Judge: Use LLM-as-judge to assess answer relevance, helpfulness, etc.

- Response vs retrieved docs
  - Goal: Measure "to what extent does the generated response agree with the retrieved context"
  - Mode: Reference-free, because it will compare the answer to the retrieved context
  - Judge: Use LLM-as-judge to assess faithfulness, hallucinations, etc.

- Retrieved docs vs input
  - Goal: Measure "how good are my retrieved results for this query"
  - Mode: Reference-free, because it will compare the question to the retrieved context
  - Judge: Use LLM-as-judge to assess relevance
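One way to keep the four evaluation types straight is to write down which pieces of data each one compares. The sketch below is only a bookkeeping aid; the dictionary, the field names, and the `runnable_evals` helper are illustrative assumptions and not part of LangSmith.

```python
# Which pieces of data each evaluation type compares (field names are illustrative)
EVAL_TYPES = {
    "response_vs_reference": {"needs": {"answer", "reference"}, "reference_free": False},
    "response_vs_input": {"needs": {"answer", "question"}, "reference_free": True},
    "response_vs_retrieved_docs": {"needs": {"answer", "contexts"}, "reference_free": True},
    "retrieved_docs_vs_input": {"needs": {"contexts", "question"}, "reference_free": True},
}


def runnable_evals(available_fields: set[str]) -> list[str]:
    """Return the evaluation types whose required fields are all available."""
    return [name for name, spec in EVAL_TYPES.items() if spec["needs"] <= available_fields]


# Without a ground-truth reference, only the reference-free evaluations can run
no_reference = runnable_evals({"question", "answer", "contexts"})
# Without retrieved contexts, the two document-based evaluations drop out
no_contexts = runnable_evals({"question", "answer", "reference"})
print(no_reference)
print(no_contexts)
```

This is also why the tutorial defines two predictors: the document-based evaluations need the retrieved contexts in addition to the answer.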

Response vs reference answer

Here is an example prompt that we can use:

https://smith.langchain.com/hub/langchain-ai/rag-answer-vs-reference

Here is a video from our LangSmith evaluation series for reference:

https://youtu.be/lTfhw_9cJqc?feature=shared

Here is our evaluator function:

- `run` is the invocation of `predict_rag_answer`, which has key `answer`
- `example` is from our eval set, which has keys `input_question` and `output_answer`
- We extract these values and pass them into our grader

from langchain_core.prompts import ChatPromptTemplate
from langchain_core.messages import SystemMessage
from langchain_openai import ChatOpenAI
from pydantic import BaseModel, Field


class Grade(BaseModel):
    Score: int = Field(..., description="Provide the score on whether the answer is correct")
    Explanation: str = Field(..., description="Explain your reasoning for the score")


# Grade prompt
grade_prompt_answer_accuracy_messages = [
    SystemMessage(
        content="""You are a teacher grading a quiz.

You will be given a QUESTION, the GROUND TRUTH (correct) ANSWER, and the STUDENT ANSWER.

Here is the grade criteria to follow:
(1) Grade the student answers based ONLY on their factual accuracy relative to the ground truth answer.
(2) Ensure that the student answer does not contain any conflicting statements.
(3) It is OK if the student answer contains more information than the ground truth answer, as long as it is factually accurate relative to the ground truth answer.

Score:
A score of 1 means that the student's answer meets all of the criteria. This is the highest (best) score.
A score of 0 means that the student's answer does not meet all of the criteria. This is the lowest possible score you can give.

Explain your reasoning in a step-by-step manner to ensure your reasoning and conclusion are correct.

Avoid simply stating the correct answer at the outset."""
    ),
    # A ("human", template) tuple is treated as a template, so the {placeholders}
    # are filled at invoke time; a HumanMessage instance would be passed through as-is
    (
        "human",
        """QUESTION: {question}
GROUND TRUTH ANSWER: {correct_answer}
STUDENT ANSWER: {student_answer}""",
    ),
]

grade_prompt_answer_accuracy = ChatPromptTemplate.from_messages(
    grade_prompt_answer_accuracy_messages
)


def answer_evaluator(run, example) -> dict:
    """
    A simple evaluator for RAG answer accuracy
    """
    # Get question, ground truth answer, RAG chain answer
    input_question = example.inputs["input_question"]
    reference = example.outputs["output_answer"]
    prediction = run.outputs["answer"]

    # LLM grader
    llm = ChatOpenAI(model="gpt-4-turbo", temperature=0)
    grade_answer_accuracy_llm = llm.with_structured_output(Grade)

    # Structured prompt
    answer_grader = grade_prompt_answer_accuracy | grade_answer_accuracy_llm

    # Run evaluator
    grade = answer_grader.invoke(
        {
            "question": input_question,
            "correct_answer": reference,
            "student_answer": prediction,
        }
    )
    # with_structured_output(Grade) returns a Grade instance, so read the field as an attribute
    score = grade.Score

    return {"key": "answer_v_reference_score", "score": score}

Now, we kick off evaluation:

- `predict_rag_answer`: Takes an example from our eval set, extracts the question, and passes it to our RAG chain
- `answer_evaluator`: Passes the RAG chain answer, question, and ground truth answer to an evaluator

from langsmith import evaluate

experiment_results = evaluate(
    predict_rag_answer,
    data=dataset_name,
    evaluators=[answer_evaluator],
    experiment_prefix="rag-answer-v-reference",
    metadata={"version": "LCEL context, gpt-4-0125-preview"},
)
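Before spending LLM calls on grading, it can help to smoke-test the wiring with a deterministic evaluator that has the same `(run, example) -> dict` shape. The sketch below is an assumption-laden illustration: `token_overlap_evaluator` is a hypothetical helper, and the `SimpleNamespace` objects are stand-ins that only mimic the `.inputs` and `.outputs` attributes read by the evaluator above.

```python
from types import SimpleNamespace


def token_overlap_evaluator(run, example) -> dict:
    """Score = fraction of reference tokens that also appear in the prediction."""
    reference_tokens = set(example.outputs["output_answer"].lower().split())
    predicted_tokens = set(run.outputs["answer"].lower().split())
    if not reference_tokens:
        return {"key": "token_overlap", "score": 0.0}
    overlap = len(reference_tokens & predicted_tokens) / len(reference_tokens)
    return {"key": "token_overlap", "score": overlap}


# Stand-ins exposing only the attributes the evaluator reads
example = SimpleNamespace(
    inputs={"input_question": "What are two types of bias?"},
    outputs={"output_answer": "majority label bias and recency bias"},
)
good_run = SimpleNamespace(outputs={"answer": "recency bias and majority label bias"})
bad_run = SimpleNamespace(outputs={"answer": "unknown"})

good_result = token_overlap_evaluator(good_run, example)
bad_result = token_overlap_evaluator(bad_run, example)
print(good_result, bad_result)
```

A crude lexical score like this is no substitute for the LLM judge, but it makes key-name mismatches between the dataset, the predictor, and the evaluator show up immediately as a `KeyError`.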

Response vs input

Here is an example prompt that we can use:

https://smith.langchain.com/hub/langchain-ai/rag-answer-helpfulness

The information flow is similar to above, but we simply look at the run answer versus the example question.

from langchain_core.prompts import ChatPromptTemplate
from langchain_core.messages import SystemMessage
from langchain_openai import ChatOpenAI
from pydantic import BaseModel, Field


class Grade(BaseModel):
    Score: int = Field(..., description="Provide the score on whether the answer addresses the question")
    Explanation: str = Field(..., description="Explain your reasoning for the score")


# Grade prompt
grade_prompt_answer_helpfulness_messages = [
    SystemMessage(
        content="""You are a teacher grading a quiz.

You will be given a QUESTION and a STUDENT ANSWER.

Here is the grade criteria to follow:
(1) Ensure the STUDENT ANSWER is concise and relevant to the QUESTION
(2) Ensure the STUDENT ANSWER helps to answer the QUESTION

Score:
A score of 1 means that the student's answer meets all of the criteria. This is the highest (best) score.
A score of 0 means that the student's answer does not meet all of the criteria. This is the lowest possible score you can give.

Explain your reasoning in a step-by-step manner to ensure your reasoning and conclusion are correct.

Avoid simply stating the correct answer at the outset."""
    ),
    # A ("human", template) tuple is treated as a template, so the {placeholders}
    # are filled at invoke time; a HumanMessage instance would be passed through as-is
    (
        "human",
        """STUDENT ANSWER: {student_answer}
QUESTION: {question}""",
    ),
]

grade_prompt_answer_helpfulness = ChatPromptTemplate.from_messages(
    grade_prompt_answer_helpfulness_messages
)


def answer_helpfulness_evaluator(run, example) -> dict:
    """
    A simple evaluator for RAG answer helpfulness
    """
    # Get question and RAG chain answer (no ground truth answer is needed here)
    input_question = example.inputs["input_question"]
    prediction = run.outputs["answer"]

    # LLM grader
    llm = ChatOpenAI(model="gpt-4-turbo", temperature=0)
    grade_prompt_answer_helpfulness_llm = llm.with_structured_output(Grade)

    # Structured prompt
    answer_grader = grade_prompt_answer_helpfulness | grade_prompt_answer_helpfulness_llm

    # Run evaluator
    grade = answer_grader.invoke(
        {"question": input_question, "student_answer": prediction}
    )
    # with_structured_output(Grade) returns a Grade instance, so read the field as an attribute
    score = grade.Score

    return {"key": "answer_helpfulness_score", "score": score}


experiment_results = evaluate(
    predict_rag_answer,
    data=dataset_name,
    evaluators=[answer_helpfulness_evaluator],
    experiment_prefix="rag-answer-helpfulness",
    metadata={"version": "LCEL context, gpt-4-0125-preview"},
)

Response vs retrieved docs

Here is an example prompt that we can use:

https://smith.langchain.com/hub/langchain-ai/rag-answer-hallucination

Here is a video from our LangSmith evaluation series for reference:

https://youtu.be/IlNglM9bKLw?feature=shared

from langchain_core.prompts import ChatPromptTemplate
from langchain_core.messages import SystemMessage
from langchain_openai import ChatOpenAI
from pydantic import BaseModel, Field


class Grade(BaseModel):
    Score: int = Field(..., description="Provide the score on if the answer hallucinates from the documents")
    Explanation: str = Field(..., description="Explain your reasoning for the score")


# Grade prompt
grade_prompt_hallucinations_messages = [
    SystemMessage(
        content="""You are a teacher grading a quiz.

You will be given FACTS and a STUDENT ANSWER.

Here is the grade criteria to follow:
(1) Ensure the STUDENT ANSWER is grounded in the FACTS.
(2) Ensure the STUDENT ANSWER does not contain "hallucinated" information outside the scope of the FACTS.

Score:
A score of 1 means that the student's answer meets all of the criteria. This is the highest (best) score.
A score of 0 means that the student's answer does not meet all of the criteria. This is the lowest possible score you can give.

Explain your reasoning in a step-by-step manner to ensure your reasoning and conclusion are correct.

Avoid simply stating the correct answer at the outset."""
    ),
    # A ("human", template) tuple is treated as a template, so the {placeholders}
    # are filled at invoke time; a HumanMessage instance would be passed through as-is
    (
        "human",
        """FACTS: {documents}
STUDENT ANSWER: {student_answer}""",
    ),
]

grade_prompt_hallucinations = ChatPromptTemplate.from_messages(
    grade_prompt_hallucinations_messages
)


def answer_hallucination_evaluator(run, example) -> dict:
    """
    A simple evaluator for generation hallucination
    """
    # RAG inputs
    input_question = example.inputs["input_question"]
    contexts = run.outputs["contexts"]

    # RAG answer
    prediction = run.outputs["answer"]

    # LLM grader
    llm = ChatOpenAI(model="gpt-4-turbo", temperature=0)
    grade_answer_hallucination_llm = llm.with_structured_output(Grade)

    # Structured prompt
    answer_grader = grade_prompt_hallucinations | grade_answer_hallucination_llm

    # Get score
    grade = answer_grader.invoke(
        {"documents": contexts, "student_answer": prediction}
    )
    # with_structured_output(Grade) returns a Grade instance, so read the field as an attribute
    score = grade.Score

    return {"key": "answer_hallucination", "score": score}


experiment_results = evaluate(
    predict_rag_answer_with_context,
    data=dataset_name,
    evaluators=[answer_hallucination_evaluator],
    experiment_prefix="rag-answer-hallucination",
    metadata={"version": "LCEL context, gpt-4-0125-preview"},
)

Retrieved docs vs input

Here is an example prompt that we can use:

https://smith.langchain.com/hub/langchain-ai/rag-document-relevance

Here is a video from our LangSmith evaluation series for reference:

https://youtu.be/Fr_7HtHjcf0?feature=shared

from langchain_core.prompts import ChatPromptTemplate
from langchain_core.messages import SystemMessage
from langchain_openai import ChatOpenAI
from pydantic import BaseModel, Field


class Grade(BaseModel):
    Score: int = Field(..., description="Provide the score on whether the retrieved documents are related to the question")
    Explanation: str = Field(..., description="Explain your reasoning for the score")


# Grade prompt
grade_prompt_doc_relevance_messages = [
    SystemMessage(
        content="""You are a teacher grading a quiz.

You will be given a QUESTION and a set of FACTS provided by the student.

Here is the grade criteria to follow:
(1) Your goal is to identify FACTS that are completely unrelated to the QUESTION
(2) If the facts contain ANY keywords or semantic meaning related to the question, consider them relevant
(3) It is OK if the facts have SOME information that is unrelated to the question as long as (2) is met

Score:
A score of 1 means that the FACTS contain ANY keywords or semantic meaning related to the QUESTION and are therefore relevant. This is the highest (best) score.
A score of 0 means that the FACTS are completely unrelated to the QUESTION. This is the lowest possible score you can give.

Explain your reasoning in a step-by-step manner to ensure your reasoning and conclusion are correct.

Avoid simply stating the correct answer at the outset."""
    ),
    # A ("human", template) tuple is treated as a template, so the {placeholders}
    # are filled at invoke time; a HumanMessage instance would be passed through as-is
    (
        "human",
        """FACTS: {documents}
QUESTION: {question}""",
    ),
]

grade_prompt_doc_relevance = ChatPromptTemplate.from_messages(
    grade_prompt_doc_relevance_messages
)


def docs_relevance_evaluator(run, example) -> dict:
    """
    A simple evaluator for document relevance
    """
    # RAG inputs
    input_question = example.inputs["input_question"]
    contexts = run.outputs["contexts"]

    # LLM grader
    llm = ChatOpenAI(model="gpt-4-turbo", temperature=0)
    grade_prompt_doc_relevance_llm = llm.with_structured_output(Grade)

    # Structured prompt
    answer_grader = grade_prompt_doc_relevance | grade_prompt_doc_relevance_llm

    # Get score
    grade = answer_grader.invoke(
        {"question": input_question, "documents": contexts}
    )
    # with_structured_output(Grade) returns a Grade instance, so read the field as an attribute
    score = grade.Score

    return {"key": "document_relevance", "score": score}


experiment_results = evaluate(
    predict_rag_answer_with_context,
    data=dataset_name,
    evaluators=[docs_relevance_evaluator],
    experiment_prefix="rag-doc-relevance",
    metadata={"version": "LCEL context, gpt-4-0125-preview"},
)

Evaluating intermediate steps

Above, we returned the retrieved documents as part of the final answer. This is not required, however: because each step of the pipeline is traced, we can instead read the retrieved documents from the intermediate chain steps.

See detail on isolating intermediate chain steps here.

Here is a video from our LangSmith evaluation series for reference:

https://youtu.be/yx3JMAaNggQ?feature=shared

from langsmith.schemas import Example, Run
from langsmith import evaluate

# ChatOpenAI, Grade, and the grade prompts are the ones defined in the sections above


def document_relevance_grader(root_run: Run, example: Example) -> dict:
    """
    A simple evaluator that checks to see if retrieved documents are relevant to the question
    """
    # Get specific steps in our RAG pipeline, which are noted with @traceable decorator
    rag_pipeline_run = next(
        run for run in root_run.child_runs if run.name == "get_answer"
    )
    retrieve_run = next(
        run for run in rag_pipeline_run.child_runs if run.name == "retrieve_docs"
    )
    contexts = "\n\n".join(doc.page_content for doc in retrieve_run.outputs["output"])
    input_question = example.inputs["input_question"]

    # LLM grader
    llm = ChatOpenAI(model="gpt-4-turbo", temperature=0)

    # Structured prompt; structured output is needed so the result exposes a Score field
    answer_grader = grade_prompt_doc_relevance | llm.with_structured_output(Grade)

    # Get score
    grade = answer_grader.invoke({"question": input_question, "documents": contexts})
    score = grade.Score

    return {"key": "document_relevance", "score": score}


def answer_hallucination_grader(root_run: Run, example: Example) -> dict:
    """
    A simple evaluator that checks to see the answer is grounded in the documents
    """
    # RAG input
    rag_pipeline_run = next(
        run for run in root_run.child_runs if run.name == "get_answer"
    )
    retrieve_run = next(
        run for run in rag_pipeline_run.child_runs if run.name == "retrieve_docs"
    )
    contexts = "\n\n".join(doc.page_content for doc in retrieve_run.outputs["output"])

    # RAG output
    prediction = rag_pipeline_run.outputs["answer"]

    # LLM grader
    llm = ChatOpenAI(model="gpt-4-turbo", temperature=0)

    # Structured prompt. Note: Grade was last defined for document relevance; redefine it
    # with a hallucination-specific description if you want the schema text to match
    answer_grader = grade_prompt_hallucinations | llm.with_structured_output(Grade)

    # Get score
    grade = answer_grader.invoke({"documents": contexts, "student_answer": prediction})
    score = grade.Score

    return {"key": "answer_hallucination", "score": score}


experiment_results = evaluate(
    predict_rag_answer,
    data=dataset_name,
    evaluators=[document_relevance_grader, answer_hallucination_grader],
)
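The graders above locate intermediate steps by walking `child_runs` and matching on the run name. The sketch below illustrates that traversal on a hypothetical stand-in class (`FakeRun` is not the LangSmith `Run` schema; it only mimics the `name`, `outputs`, and `child_runs` attributes). Unlike a bare `next(...)`, the hypothetical `find_run` helper returns `None` when a step is missing rather than raising `StopIteration`.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FakeRun:
    """Hypothetical stand-in for a traced run: a name, outputs, and nested child runs."""
    name: str
    outputs: dict = field(default_factory=dict)
    child_runs: list = field(default_factory=list)


def find_run(root: FakeRun, name: str) -> Optional[FakeRun]:
    """Depth-first search for the first run with the given name; None if absent."""
    if root.name == name:
        return root
    for child in root.child_runs:
        found = find_run(child, name)
        if found is not None:
            return found
    return None


# Mirror the nesting used in this tutorial: predictor -> get_answer -> retrieve_docs / invoke_llm
trace = FakeRun(
    name="predict_rag_answer",
    child_runs=[
        FakeRun(
            name="get_answer",
            outputs={"answer": "ReAct interleaves reasoning and acting."},
            child_runs=[
                FakeRun(name="retrieve_docs", outputs={"output": ["doc A", "doc B"]}),
                FakeRun(name="invoke_llm"),
            ],
        )
    ],
)

retrieve_run = find_run(trace, "retrieve_docs")
missing_run = find_run(trace, "rerank_docs")
print(retrieve_run.outputs["output"], missing_run)
```

Matching on names means that renaming a traced function (for example `retrieve_docs`) silently changes what an evaluator can find, so a guard against a missing step is worth considering in real graders.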